Google Unveils Powerful AI Models to Drive the Future of Humanoid Robots

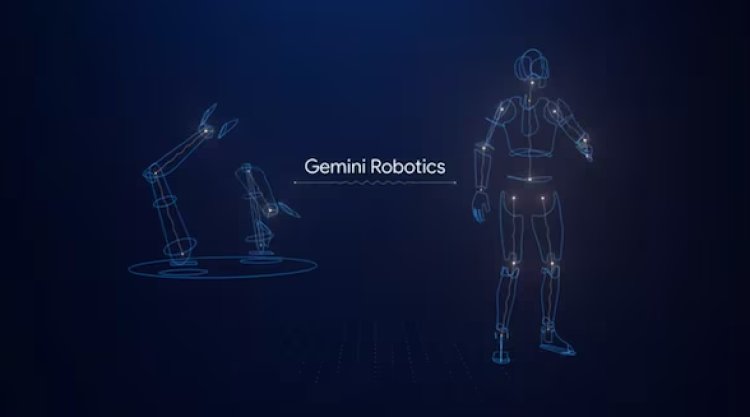

Gemini Robotics and Gemini Robotics-ER are two new AI models from Google DeepMind, both built on Gemini 2.0.

Highlights

- Google DeepMind introduces new AI models to power robots.

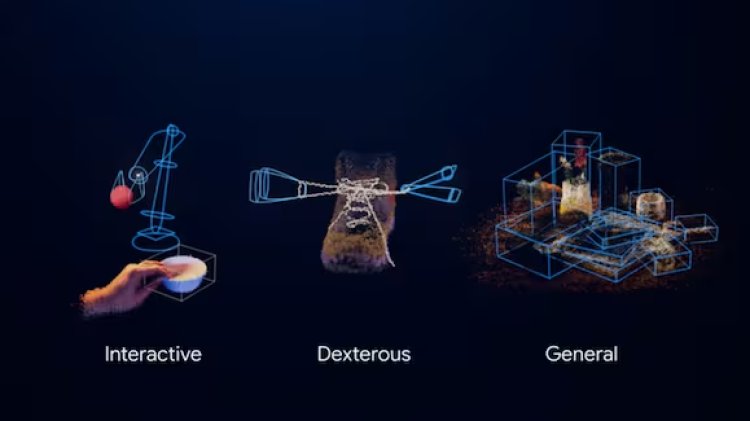

- Gemini Robotics is built around three pillars: generality, interactivity, and dexterity.

- The ER model improves a robot's understanding of its 3D environment.

Google first unveiled its Gemini AI model in 2023, and the company has been expanding the family ever since, from image generators to reasoning models. It has now revealed Gemini Robotics and Gemini Robotics-ER, new AI models designed to power robots and help them act in the physical world more like humans do.


Google writes on its blog that "to be useful and helpful to people in the physical realm, AI must demonstrate 'embodied' reasoning, which is the humanlike ability to comprehend and react to the world around us as well as safely take action to get things done." Let's take a closer look at the two models.

What is Gemini Robotics, and how does it work?

Google describes Gemini Robotics as "an advanced vision-language-action (VLA) model that was built on Gemini 2.0 with the addition of physical actions as a new output modality." In other words, the model is designed to control robots directly.


The new model advances three areas that Google DeepMind considers critical to building useful robots: generality, interactivity, and dexterity. Gemini Robotics can adapt to new situations, interacts more readily with people and its surroundings, and can handle delicate physical tasks such as unscrewing a bottle cap or folding paper.

The model can understand and respond to a wider range of natural-language commands, adapting its behaviour to the user's input. It also continuously monitors its environment, detects changes to its surroundings or instructions, and adjusts its actions accordingly. This degree of control, or "steerability," lets people work more effectively with robot assistants in a variety of settings, including the home and the office.

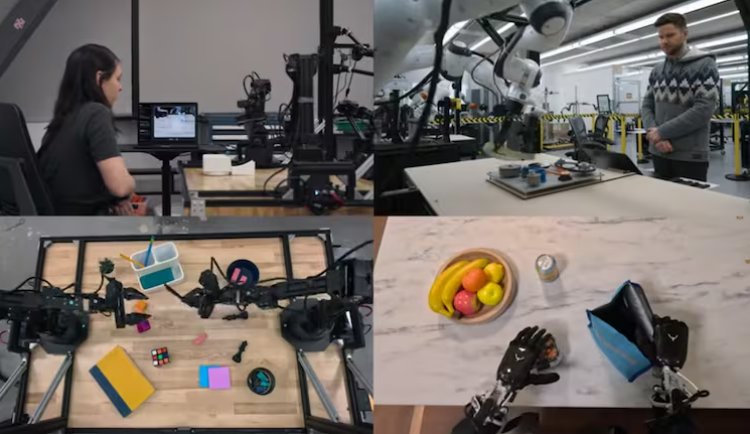

Google also emphasises that Gemini Robotics is designed to work across different robot types because, like people, robots come in many shapes and sizes. "We trained the model primarily on data from the bi-arm robotic platform, ALOHA 2, but we also demonstrated that the model could control a bi-arm platform, based on the Franka arms used in many academic labs," the company writes.

Gemini Robotics-ER

Alongside Gemini Robotics, Google has launched Gemini Robotics-ER, where "ER" stands for embodied reasoning. The model enhances Gemini's understanding of the world in ways that are critical for robotics, especially spatial reasoning, and it lets roboticists connect Gemini to their existing low-level controllers.

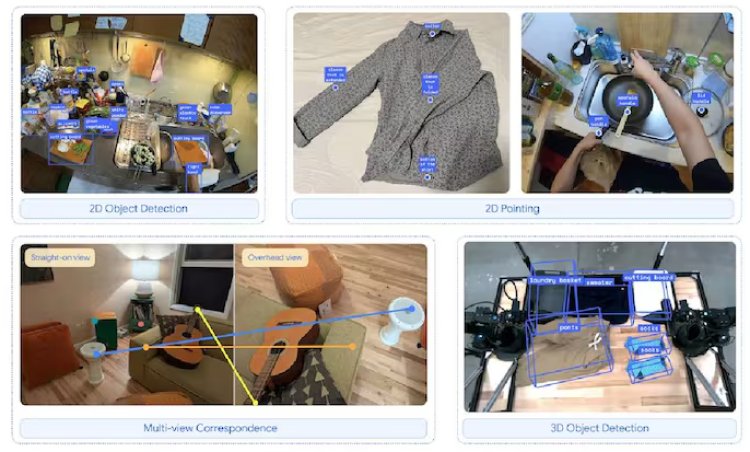

Gemini Robotics-ER substantially improves Gemini 2.0's existing pointing and 3D detection abilities. By combining spatial reasoning with Gemini's coding skills, it can create entirely new capabilities on the fly. For example, when shown a coffee mug, the model can work out an appropriate two-finger grasp on the handle and a safe trajectory for approaching it.


Gemini Robotics-ER can perform all the steps needed to control a robot out of the box, including perception, state estimation, spatial understanding, planning, and code generation.
